We are thrilled to announce that our lab’s latest paper, “A Survey on Proactive Deepfake Defense: Disruption and Watermarking,” has been accepted for publication in the prestigious journal ACM Computing Surveys (CSUR).

Our Focus: Proactive Defense

Our survey provides a comprehensive review of proactive defense mechanisms. Rather than detecting deepfakes after they circulate, these strategies act at the source, before harm can occur.

We focus on two main categories of proactive defenses:

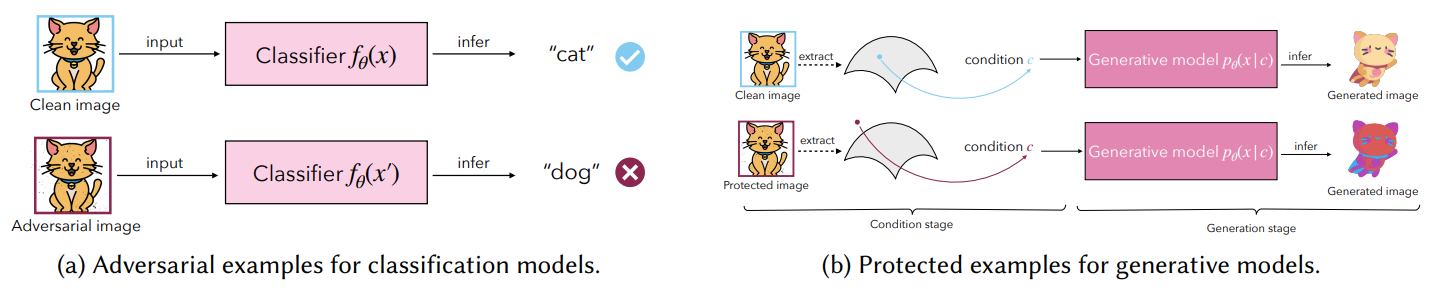

- Disruption: This approach protects an individual's data (such as photos or audio clips) by introducing tiny, imperceptible perturbations. These "cloaked" data samples prevent generative AI models from exploiting them to create deepfakes, giving users control over how their data is used.

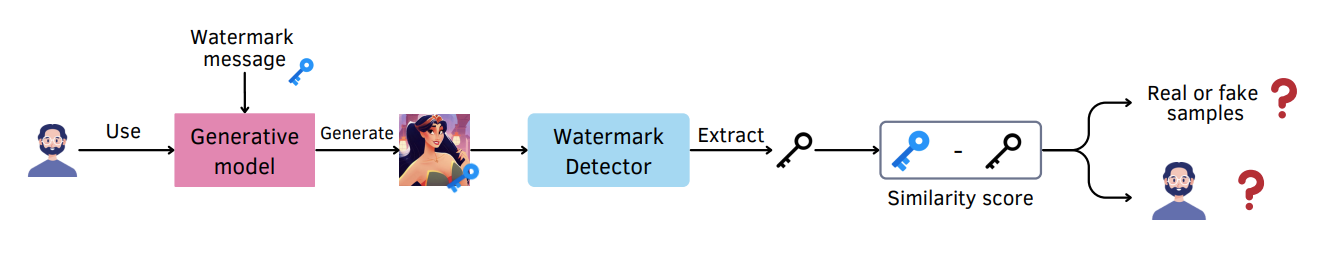

- Watermarking: This technique embeds a hidden, verifiable message into either the data itself or the AI models. The message enables content authentication and attribution, making it possible to trace a piece of synthetic content back to its source.
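
To give a feel for the watermarking idea, here is a deliberately simple sketch. It is not a method from the survey: it uses classic least-significant-bit (LSB) embedding on a list of 8-bit pixel values, and the function names `embed_watermark` and `extract_watermark` are hypothetical, chosen only for this illustration. Real deepfake watermarking schemes are designed to survive compression, editing, and regeneration, which this toy example does not attempt.

```python
def embed_watermark(pixels, bits):
    """Hide one message bit in the least significant bit of each pixel.

    pixels: list of ints in 0..255 (an assumed, simplified image format).
    bits:   list of 0/1 values, no longer than pixels.
    """
    if len(bits) > len(pixels):
        raise ValueError("message is longer than the cover data")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then write the message bit into it.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels, n_bits):
    """Read the first n_bits message bits back from the lowest bit of each pixel."""
    return [p & 1 for p in pixels[:n_bits]]


if __name__ == "__main__":
    cover = [10, 11, 12, 13, 200, 201]
    message = [1, 0, 1, 1]
    marked = embed_watermark(cover, message)
    # Each pixel changes by at most 1, so the mark is visually negligible.
    print(marked)
    print(extract_watermark(marked, len(message)) == message)
```

Because each pixel value changes by at most one intensity level, the hidden message is invisible to the eye, yet anyone who knows where to look can read it back and verify the source.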

Publication Details

Title: A Survey on Proactive Deepfake Defense: Disruption and Watermarking

Authors: Hong-Hanh Nguyen-Le, Van-Tuan Tran, Thuc Nguyen, and Nhien-An Le-Khac

Journal: ACM Computing Surveys (CSUR)

DOI: https://doi.org/10.1145/3771296

This research was conducted with the financial support of Science Foundation Ireland under Grant number 18/CRT/6183.